Pipeline Storage

This document outlines how a pipeline will store its outputs and how the API will reference those outputs.

Context

Snakemake

In Snakemake, the outputs of a rule are stored in the output directive.

This directive is a list of files that Snakemake expects the rule will produce. If any of the files in the output directive do not exist, the rule will be run.

Basic example

In the following example, running snakemake --cores 1 makes Snakemake look for the rule that produces output.txt. If output.txt does not exist, the rule my_rule will be run.
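The "run the rule if an output is missing" behaviour can be sketched in plain Python. This is only an illustration of the check described here, not Snakemake's implementation (Snakemake also takes other rerun triggers, such as modification times, into account). The function name needs_run and its root parameter are hypothetical.

```python
import tempfile
from pathlib import Path
from typing import Iterable


def needs_run(outputs: Iterable[str], root: str = ".") -> bool:
    """Return True if any declared output file is missing under root."""
    return any(not (Path(root) / out).exists() for out in outputs)


# Demonstration in a throwaway directory: the rule would run while
# output.txt is missing and would be skipped once the file exists.
with tempfile.TemporaryDirectory() as tmp:
    before = needs_run(["output.txt"], root=tmp)
    (Path(tmp) / "output.txt").write_text("done\n")
    after = needs_run(["output.txt"], root=tmp)

print(before, after)
```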

Snakemake Storage Plugins

Snakemake has a plugin system that allows for the storage of outputs in different locations.

This is useful for storing outputs in a cloud storage system like AWS S3 or Google Cloud Storage. For example, the HTTP plugin will likely be used in the pipelines to retrieve raw data from publicly available sources.

The idea for the API is to take any pipeline and run it so that all the outputs are stored in a cloud storage system, which is Google Cloud Storage in this case.

This can be achieved by appending --default-storage-provider gcs to the snakemake command.

This requires a dedicated bucket for all the pipelines. For the sake of this example, the bucket will be called orcestra-data-public.

We would then append --default-storage-provider gcs --default-storage-prefix gs://orcestra-data-public to the snakemake command.

In the above example, this would then upload the output.txt to gs://orcestra-data-public/results/output.txt (assuming no errors occur).
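As a rough sketch of how the API might assemble this command and predict where an output ends up, the helpers below build the extra flags and join the prefix with a relative output path. The names storage_args and remote_uri are hypothetical, and the path mapping simply follows the prefix-plus-relative-path behaviour described above rather than calling Snakemake itself.

```python
from typing import List


def storage_args(provider: str, prefix: str) -> List[str]:
    """Build the extra flags appended to the snakemake command."""
    return [
        "--default-storage-provider", provider,
        "--default-storage-prefix", prefix,
    ]


def remote_uri(prefix: str, relative_output: str) -> str:
    """Join the storage prefix and an output path relative to the pipeline root."""
    return f"{prefix.rstrip('/')}/{relative_output.lstrip('/')}"


# Assumed bucket name, taken from the example in this document.
PREFIX = "gs://orcestra-data-public"

cmd = ["snakemake", "--cores", "1", *storage_args("gcs", PREFIX)]
print(" ".join(cmd))
print(remote_uri(PREFIX, "results/output.txt"))
```

Passing the command as a list (for instance to subprocess.run) avoids shell quoting issues if a prefix ever contains unusual characters.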

API Reference to Outputs

The requirements for the API would be:

Establish a Name of each pipeline. This would be used in the API to reference the pipeline itself for the front-end (pipeline selection) and used to generate the GCS prefix for the pipeline outputs.

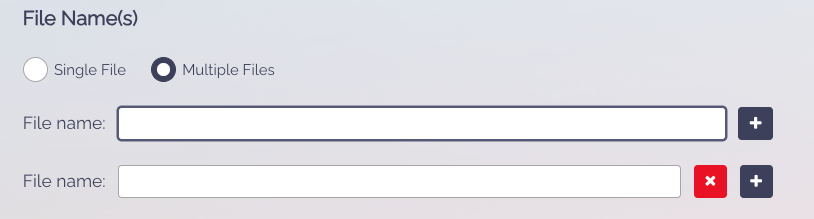

Upon submission of a pipeline, the user needs to specify outputs by their relative paths to the root directory. This will then be used by the API after the pipeline is run, to retrieve the outputs from the GCS. The API can then expose the outputs to the user/front-end client.

This is like the previous orcestra implementation, except that instead of just the file name, it should be the relative path(s) to the root directory.

So if this is the given info (random example and not a real pipeline):

After the pipeline runs, the API will then retrieve the following files:

gs://orcestra-data-public/test_pipeline/results/pset.rds
gs://orcestra-data-public/test_pipeline/results/dnl.json

because the name of the pipeline is test_pipeline and the outputs are results/pset.rds and results/dnl.json.
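The lookup described above can be sketched as a small helper that combines the bucket, the pipeline name, and each relative output path. This is a minimal illustration under the assumptions in this document (a single shared bucket, with the pipeline name as the GCS prefix). The function name output_uris and the path validation are hypothetical additions.

```python
from posixpath import normpath
from typing import Iterable, List


def output_uris(bucket: str, pipeline_name: str, outputs: Iterable[str]) -> List[str]:
    """Map relative output paths to the GCS URIs the API would retrieve."""
    uris = []
    for rel in outputs:
        clean = normpath(rel)
        # Outputs must stay inside the pipeline root: reject absolute
        # paths and paths that climb out of the root with "..".
        if clean.startswith("/") or clean == ".." or clean.startswith("../"):
            raise ValueError(f"output must be relative to the pipeline root: {rel!r}")
        uris.append(f"gs://{bucket}/{pipeline_name}/{clean}")
    return uris


uris = output_uris(
    "orcestra-data-public",
    "test_pipeline",
    ["results/pset.rds", "results/dnl.json"],
)
for uri in uris:
    print(uri)
```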